Introduction

Floresta is a collection of Rust libraries designed for building a Bitcoin full node, alongside the assembled daemon and RPC client binaries, all developed by Davidson Souza.

A key feature of Floresta is its use of the utreexo accumulator to maintain the UTXO set in a highly compact format. It also incorporates innovative techniques to significantly reduce Initial Block Download (IBD) times with minimal security tradeoffs.

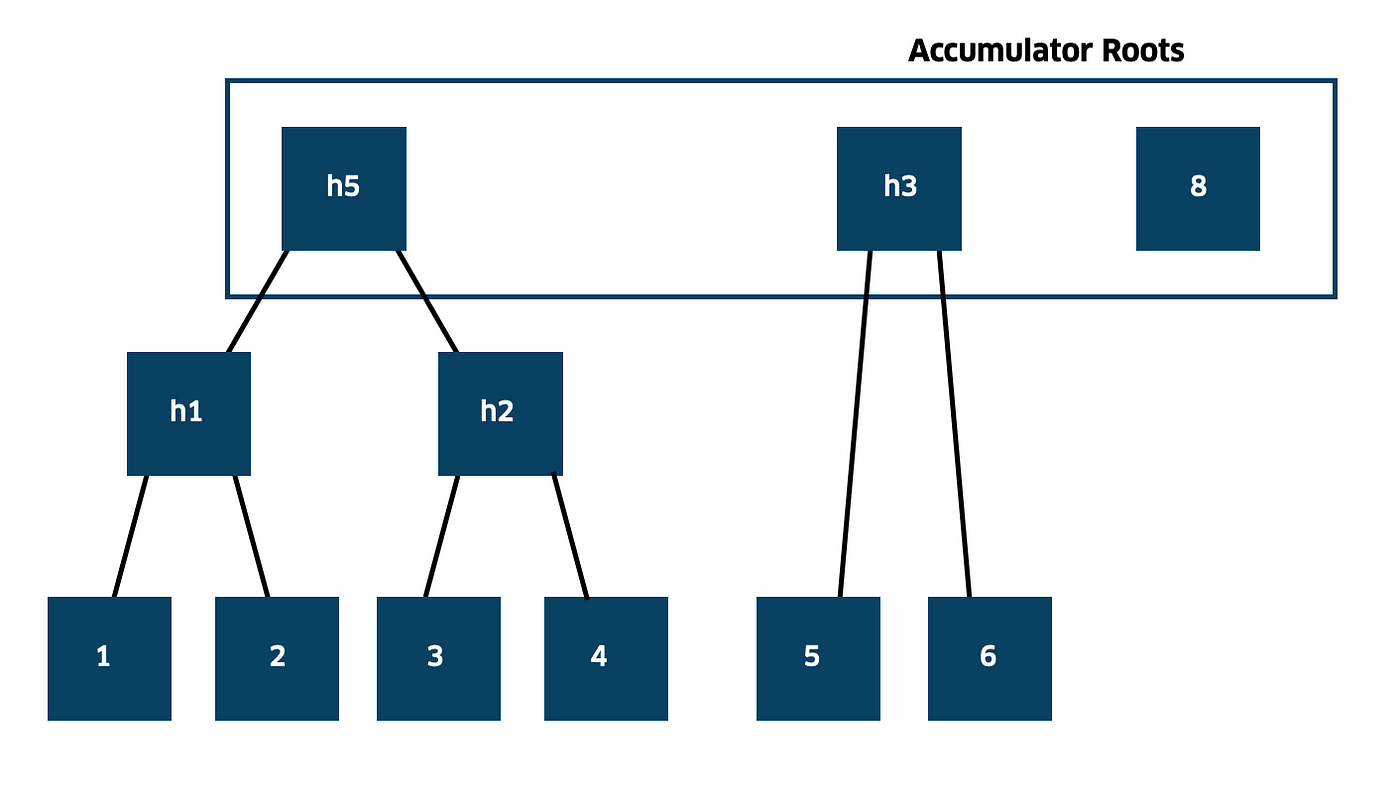

The Utreexo accumulator consists of a forest of merkle trees where leaves are individual UTXOs, thus the name U-Tree-XO. The UTXO set at any moment is represented as the merkle roots of the forest.

The novelty in this cryptographic system is a mechanism to update the forest, both adding new UTXOs and deleting existing ones from the set. When a transaction spends UTXOs we can verify it with an inclusion proof, and then delete those specific UTXOs from the set.

Currently, the node can only operate in pruned mode, meaning it deletes block data after validation. Combined with utreexo, this design keeps storage requirements exceptionally low (< 1 GB).

The ultimate vision for Floresta is to deliver a reliable and ultra-lightweight node implementation capable of running on low-resource devices, democratizing the access to the Bitcoin blockchain. However, keep in mind that Floresta remains highly experimental software ⚠️.

About This Book

This documentation provides an overview of the process involved in creating and running a Floresta node (i.e., a UtreexoNode). We start by examining the project's overall structure, which is necessary to build a foundation for understanding its internal workings.

The book will contain plenty of code snippets, which are identical to Floresta code sections. Each snippet includes a commented path referencing its corresponding file, visible by clicking the eye icon in the top-right corner of the snippet (e.g., // Path: floresta-chain/src/lib.rs).

You will also find some interactive quizzes to test and reinforce your understanding of Floresta!

Note on Types

To avoid repetitive explanations, this documentation follows a simple convention: unless otherwise stated any mentioned type is assumed to come from the bitcoin crate, which provides many of the building blocks for Floresta. If you see a type, and we don't mention its source, you can assume it's a bitcoin type.

Project Overview

The Floresta project is made up of a few library crates, providing modular node and application components, and two binaries: florestad, the Floresta daemon (i.e., the assembled node implementation), and floresta-cli, the command-line interface for florestad.

Developers can use the core components in the libraries to build their own wallets and node implementations. They can use individual libraries or use the whole pack of components with the floresta meta-crate, which just re-exports libraries.

Components of Floresta

At the heart of Floresta are two fundamental libraries:

floresta-chain, which validates the blockchain and maintains node state.floresta-wire, which connects to the Bitcoin network, fetching transactions and blocks to advance the chain.

A useful way to picture the role of these two libraries is with a car analogy:

A full node is like a self-driving car that must keep up with a constantly moving destination, as new blocks are produced. The Bitcoin network is the road.

floresta-wireis the car's sensors and navigation, reading the road ahead.floresta-chainis the engine and control system, deciding how to move forward. Withoutfloresta-wire, the engine has no data to act on; withoutfloresta-chain, the car cannot move.

These two crates share building blocks from floresta-common (a small common library). Together, they form the minimum needed for a functioning node. On top of them, utilities extend functionality:

floresta-watch-only: a watch-only wallet backend for tracking addresses and balances.floresta-compact-filters: builds and queries BIP-158 filters to speed wallet rescans and enable UTXO lookups. This is especially useful as Floresta is fully pruned.floresta-electrum: an Electrum server that answers wallet-oriented queries (headers, balances, UTXOs, transactions) for external clients.

Finally, we find a meta-crate that re-exports these components, and two libraries that power the floresta-cli and florestad binaries:

floresta- A meta-crate that re-exports the previous modular components.

floresta-rpc- Provides the JSON-RPC API and types used by the CLI, powering

floresta-cli.

- Provides the JSON-RPC API and types used by the CLI, powering

floresta-node- Implements the node functionality by assembling all the components. This crate is the "glue layer" between the modular crates and the daemon binary (

florestadwill just invoke the logic implemented here).

- Implements the node functionality by assembling all the components. This crate is the "glue layer" between the modular crates and the daemon binary (

UtreexoNode

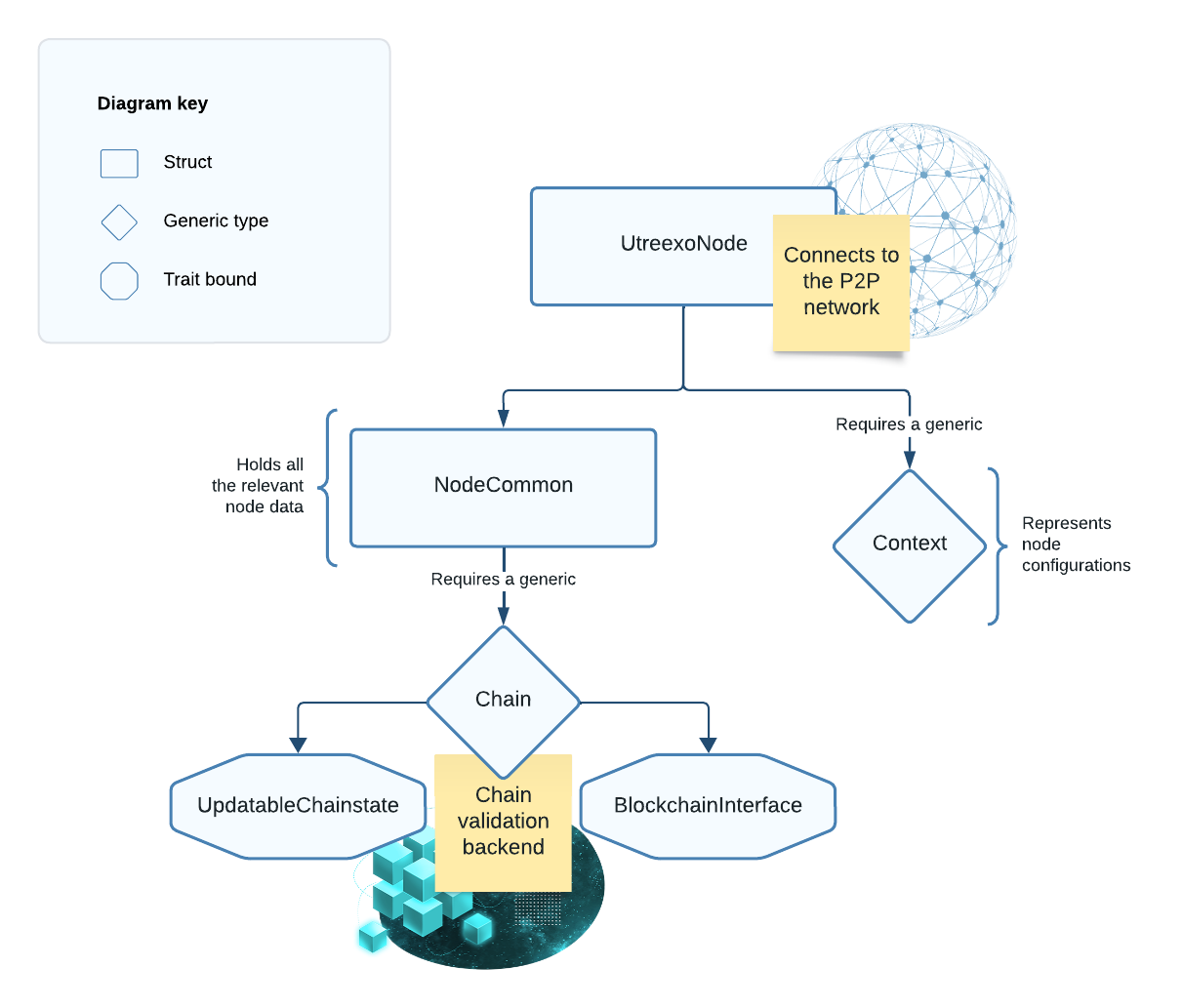

UtreexoNode is the top-level type in Floresta, responsible for managing P2P connections, receiving network data, and broadcasting transactions. All its logic is found at the floresta-wire crate.

Blocks fetched by UtreexoNode are passed to a blockchain backend for validation and state tracking. This backend is represented by a generic Chain type. Additionally, UtreexoNode relies on a separate generic Context type to provide context-specific behavior. The default Context is RunningNode, which handles the transition between other contexts (it's the highest level context).

Figure 1: Diagram of the UtreexoNode type.

Below is the actual type definition, which is a struct with two fields and trait bounds for the Chain backend.

Filename: floresta-wire/src/p2p_wire/node.rs

// Path: floresta-wire/src/p2p_wire/node.rs

pub struct UtreexoNode<Chain: ChainBackend, Context = RunningNode> {

pub(crate) common: NodeCommon<Chain>,

pub(crate) context: Context,

}The Chain backend must implement the ChainBackend trait, which is just shorthand for the two key traits that define the backend.

// Path: floresta-chain/src/pruned_utreexo/mod.rs

pub trait ChainBackend: BlockchainInterface + UpdatableChainstate {}Each trait defines a distinct responsibility of our blockchain backend:

UpdatableChainstate: Methods to update the state of the blockchain backend.BlockchainInterface: Defines the interface for interacting with other components, such asUtreexoNode.

Both traits are located in the floresta-chain library crate, in src/pruned_utreexo/mod.rs.

In the next section, we will explore the required API for these traits.

Chain Backend API

We will now take a look at the API that the Chain backend (from UtreexoNode) is required to expose, as part of the BlockchainInterface and UpdatableChainstate traits.

The lists below are only meant to provide an initial sense of the expected chain API.

The BlockchainInterface Trait

The BlockchainInterface methods are mainly about getting information from the current view of the blockchain and state of validation.

It defines a generic associated error type bounded by the std::error::Error trait—or, in no-std environments, by Floresta's own minimal Error marker trait—so each BlockchainInterface implementation can pick its own error type.

The list of required methods:

get_block_hash, given a u32 height.get_tx, given its txid.get_heightof the chain.broadcasta transaction to the network.estimate_feefor inclusion in usize target blocks.get_block, given its hash.get_best_blockhash and height.get_block_header, given its hash.is_in_ibd, whether we are in Initial Block Download (IBD) or not.get_unbroadcastedtransactions.is_coinbase_mature, given its block hash and height (on the mainchain, coinbase transactions mature after 100 blocks).get_block_locator, i.e., a compact list of block hashes used to efficiently identify the most recent common point in the blockchain between two nodes for synchronization purposes.get_block_locator_for_tip, given the hash of the tip block. This can be used for tips that are not canonical or best.get_validation_index, i.e., the height of the last block we have validated.get_block_height, given its block hash.get_chain_tipsblock hashes, including the best tip and non-canonical ones.get_fork_point, to get the block hash where a given branch forks (the branch is represented by its tip block hash).get_params, to get the parameters for chain consensus.acc, to get the current utreexo accumulator.

Also, we have a subscribe method which allows other components to receive notifications of new validated blocks from the blockchain backend.

Filename: floresta-chain/src/pruned_utreexo/mod.rs

// Path: floresta-chain/src/pruned_utreexo/mod.rs

pub trait BlockchainInterface {

type Error: Error + Send + Sync + 'static;

// ...

fn get_block_hash(&self, height: u32) -> Result<bitcoin::BlockHash, Self::Error>;

fn get_tx(&self, txid: &bitcoin::Txid) -> Result<Option<bitcoin::Transaction>, Self::Error>;

fn get_height(&self) -> Result<u32, Self::Error>;

fn broadcast(&self, tx: &bitcoin::Transaction) -> Result<(), Self::Error>;

fn estimate_fee(&self, target: usize) -> Result<f64, Self::Error>;

fn get_block(&self, hash: &BlockHash) -> Result<Block, Self::Error>;

fn get_best_block(&self) -> Result<(u32, BlockHash), Self::Error>;

fn get_block_header(&self, hash: &BlockHash) -> Result<BlockHeader, Self::Error>;

fn subscribe(&self, tx: Arc<dyn BlockConsumer>);

// ...

fn is_in_ibd(&self) -> bool;

fn get_unbroadcasted(&self) -> Vec<Transaction>;

fn is_coinbase_mature(&self, height: u32, block: BlockHash) -> Result<bool, Self::Error>;

fn get_block_locator(&self) -> Result<Vec<BlockHash>, Self::Error>;

fn get_block_locator_for_tip(&self, tip: BlockHash) -> Result<Vec<BlockHash>, BlockchainError>;

fn get_validation_index(&self) -> Result<u32, Self::Error>;

fn get_block_height(&self, hash: &BlockHash) -> Result<Option<u32>, Self::Error>;

fn update_acc(

&self,

acc: Stump,

block: Block,

height: u32,

proof: Proof,

del_hashes: Vec<sha256::Hash>,

) -> Result<Stump, Self::Error>;

fn get_chain_tips(&self) -> Result<Vec<BlockHash>, Self::Error>;

fn validate_block(

&self,

block: &Block,

proof: Proof,

inputs: HashMap<OutPoint, UtxoData>,

del_hashes: Vec<sha256::Hash>,

acc: Stump,

) -> Result<(), Self::Error>;

fn get_fork_point(&self, block: BlockHash) -> Result<BlockHash, Self::Error>;

fn get_params(&self) -> bitcoin::params::Params;

fn acc(&self) -> Stump;

}// Path: floresta-chain/src/pruned_utreexo/mod.rs

pub enum Notification {

NewBlock((Block, u32)),

}Any type that implements the BlockConsumer trait can subscribe to our BlockchainInterface by passing a reference of itself, and receive notifications of new blocks (including block data and height). In the future this can be extended to also notify transactions.

Validation Methods

Finally, there are two validation methods that do NOT update the node state:

update_acc, to get the new accumulator after applying a new block. It requires the current accumulator, the new block data, the inclusion proof for the spent UTXOs, and the hashes of the spent UTXOs.validate_block, which instead of only verifying the inclusion proof, validates the whole block (including its transactions, for which the spent UTXOs themselves are needed).

The UpdatableChainstate Trait

On the other hand, the methods required by UpdatableChainstate are expected to update the node state.

These methods use the BlockchainError enum, found in pruned_utreexo/error.rs. Each variant of BlockchainError represents a kind of error that is expected to occur (block validation errors, invalid utreexo proofs, etc.). The UpdatableChainstate methods are:

Very important

connect_block: Takes a block and utreexo data, validates the block and adds it to our chain.accept_header: Checks a header and saves it in storage. This is called beforeconnect_block, which is responsible for accepting or rejecting the actual block.

// Path: floresta-chain/src/pruned_utreexo/mod.rs

pub trait UpdatableChainstate {

fn connect_block(

&self,

block: &Block,

proof: Proof,

inputs: HashMap<OutPoint, UtxoData>,

del_hashes: Vec<sha256::Hash>,

) -> Result<u32, BlockchainError>;

// ...

fn switch_chain(&self, new_tip: BlockHash) -> Result<(), BlockchainError>;

fn accept_header(&self, header: BlockHeader) -> Result<(), BlockchainError>;

// ...

fn handle_transaction(&self) -> Result<(), BlockchainError>;

fn flush(&self) -> Result<(), BlockchainError>;

fn toggle_ibd(&self, is_ibd: bool);

fn invalidate_block(&self, block: BlockHash) -> Result<(), BlockchainError>;

fn mark_block_as_valid(&self, block: BlockHash) -> Result<(), BlockchainError>;

fn get_root_hashes(&self) -> Vec<BitcoinNodeHash>;

fn get_partial_chain(

&self,

initial_height: u32,

final_height: u32,

acc: Stump,

) -> Result<PartialChainState, BlockchainError>;

fn mark_chain_as_assumed(&self, acc: Stump, tip: BlockHash) -> Result<bool, BlockchainError>;

fn get_acc(&self) -> Stump;

}Usually, in IBD we fetch a chain of headers with sufficient PoW first, and only then do we ask for the block data (i.e., the transactions) in order to verify the blocks. This way we ensure that DoS attacks sending our node invalid blocks, with the purpose of wasting our resources, are costly because of the required PoW.

Others

switch_chain: Reorg to another branch, given its tip block hash.handle_transaction: Process transactions that are in the mempool.flush: Writes pending data to storage. Should be invoked periodically.toggle_ibd: Toggle the IBD process on/off.invalidate_block: Tells the blockchain backend to consider this block invalid.mark_block_as_valid: Overrides a block that was marked as invalid, considering it as fully validated.get_root_hashes: Returns the root hashes of our utreexo accumulator.get_partial_chain: Returns aPartialChainState(a Floresta type allowing to validate parts of the chain in parallel, explained in Chapter 5), given the height range and the initial utreexo state.mark_chain_as_assumed: Given a block hash and the corresponding accumulator, assume every ancestor block is valid.get_acc: Returns the current accumulator.

Using ChainState as Chain Backend

Recall that

UtreexoNodeis the high-level component of Floresta that will interact with the P2P network. It is made of itsContextand aNodeCommonthat holds aChainbackend (for validation and state tracking). This and the following chapters will focus solely on the Floresta chain backend.

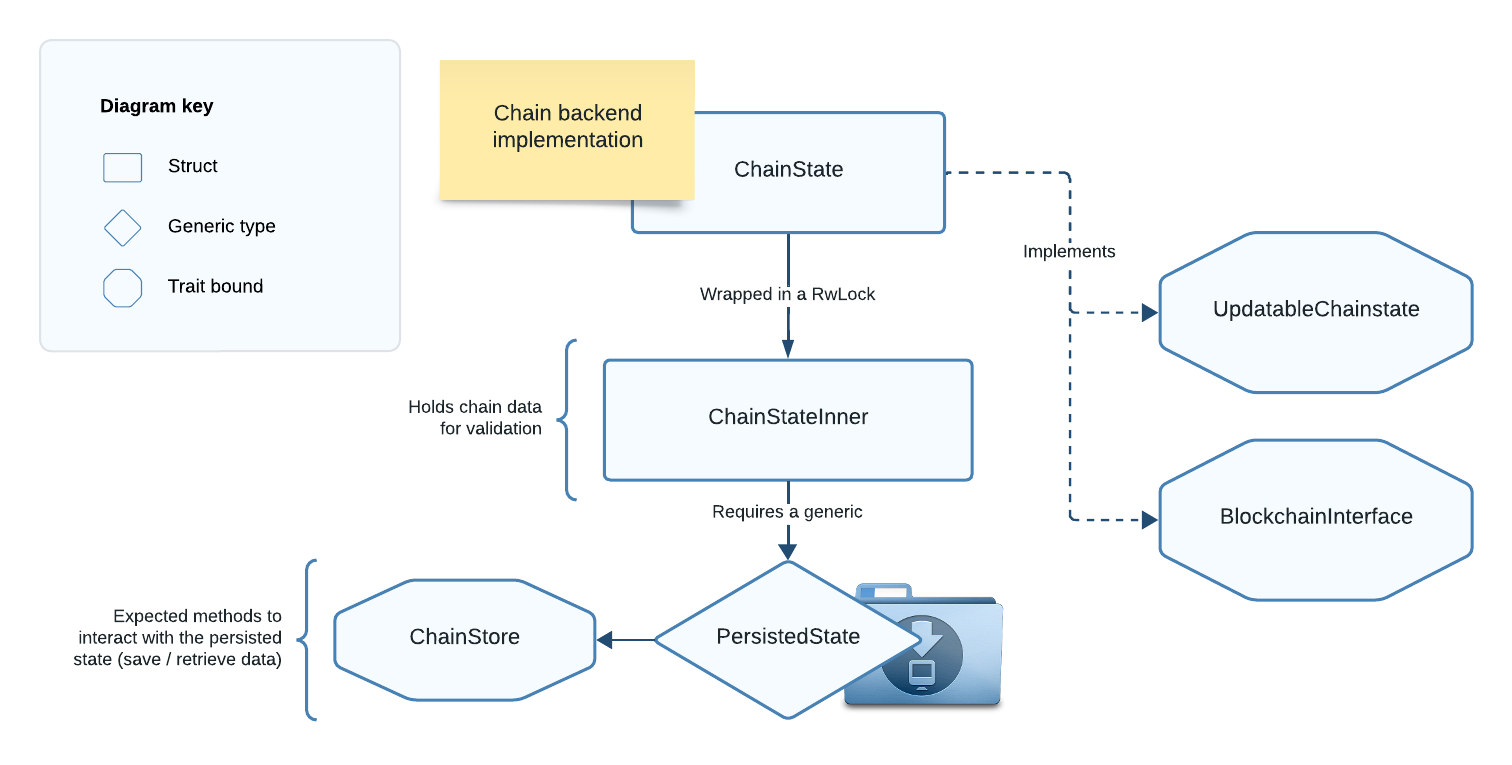

In this chapter we will learn about ChainState, a type that implements UpdatableChainstate + BlockchainInterface, so we can use it as Chain backend.

ChainState is the default blockchain backend provided by Floresta, with all its logic encapsulated within the floresta-chain library.

The following associations clarify the module structure:

UtreexoNodedeclaration and logic resides infloresta-wireChainStatedeclaration and logic resides infloresta-chain

ChainState Structure

The job of

ChainStateis to validate blocks, update the state and store it.

For a node to keep track of the chain state effectively, it is important to ensure the state is persisted to some sort of storage system. This way the node progress is saved, and we can recover it after the device is turned off.

That's why ChainState uses a generic PersistedState type, bounded by the ChainStore trait, which defines how we interact with our persistent state database.

Figure 2: Diagram of the ChainState type.

Filename: pruned_utreexo/chain_state.rs

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

pub struct ChainState<PersistedState: ChainStore> {

inner: RwLock<ChainStateInner<PersistedState>>,

}// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

impl<PersistedState: ChainStore> BlockchainInterface for ChainState<PersistedState> {

// Implementation of BlockchainInterface for ChainState// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

impl<PersistedState: ChainStore> UpdatableChainstate for ChainState<PersistedState> {

// Implementation of UpdatableChainstate for ChainStateAs Floresta is currently only pruned, the expected database primarily consists of the block header chain and the utreexo accumulator; blocks themselves are not stored.

The default implementation of ChainStore is FlatChainStore, but we also provide a simpler KvChainStore implementation. This means that developers may:

- Implement custom

UpdatableChainstate + BlockchainInterfacetypes for use asChainbackends. - Use the provided

ChainStatebackend:- With their own

ChainStoreimplementation. - Or the provided

FlatChainStoreorKvChainStoreimplementations.

- With their own

Next, let’s build the ChainState struct step by step!

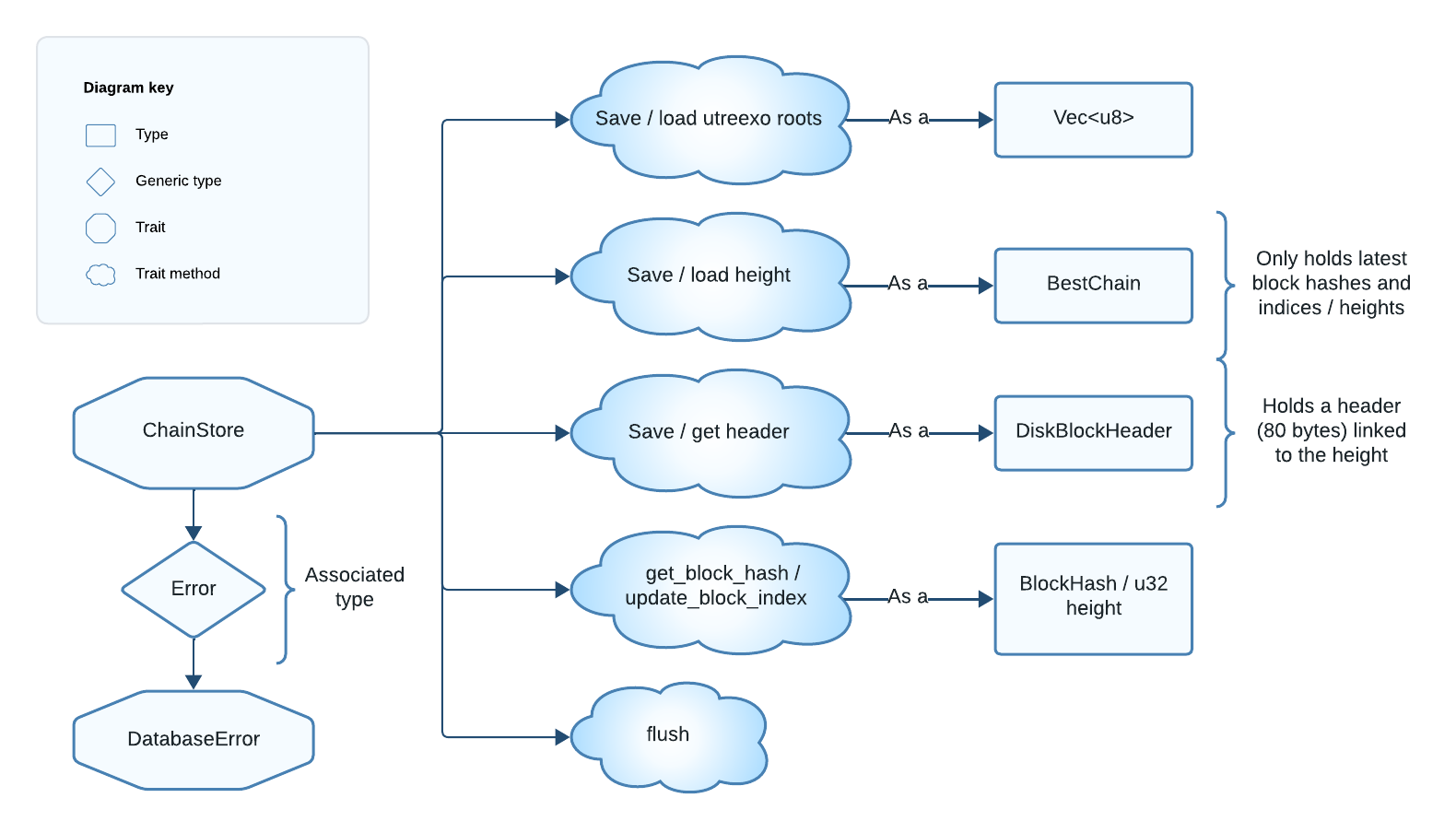

The ChainStore Trait

ChainStoreis a trait that abstracts the persistent storage layer for the FlorestaChainStatebackend.

To create a ChainState, we start by building its ChainStore implementation.

ChainStore API

The methods required by ChainStore, designed for interaction with persistent storage, are:

save_roots_for_block/load_roots_for_block: Save or load the utreexo accumulator (merkle roots) that results after processing a particular block.save_height/load_height: Save or load the current chain tip data.save_header/get_header: Save or retrieve a block header by itsBlockHash.get_block_hash/update_block_index: Retrieve or associate aBlockHashwith a chain height.flush: Immediately persist saved data still in memory. This ensures data recovery in case of a crash.check_integrity: Performs a database integrity check. This can be a no-op if our implementation leverages a database crate that ensures integrity.

In other words, the implementation of these methods should allow us to save and load:

- The accumulator for each block (serialized as a

Vec<u8>), such that we can reorg our UTXO set if a fork becomes the best chain. - The current chain tip data (as

BestChain). - Block headers (as

DiskBlockHeader), associated to the block hash. - Block hashes (as

BlockHash), associated with a height.

BestChain and DiskBlockHeader are important Floresta types that we will see in a minute. DiskBlockHeader represents stored block headers, while BestChain tracks the chain tip metadata.

With this data we have a pruned view of the blockchain, metadata about the chain we are in, and the compact UTXO set (the utreexo accumulator).

Figure 3: Diagram of the ChainStore trait.

ChainStore also has an associated Error type for the methods:

Filename: pruned_utreexo/chainstore.rs

// Path: floresta-chain/src/pruned_utreexo/chainstore.rs

pub trait ChainStore {

type Error: DatabaseError;

fn save_roots_for_block(&mut self, roots: Vec<u8>, height: u32) -> Result<(), Self::Error>;

// ...

fn load_roots_for_block(&mut self, height: u32) -> Result<Option<Vec<u8>>, Self::Error>;

fn load_height(&self) -> Result<Option<BestChain>, Self::Error>;

fn save_height(&mut self, height: &BestChain) -> Result<(), Self::Error>;

fn get_header(&self, block_hash: &BlockHash) -> Result<Option<DiskBlockHeader>, Self::Error>;

fn get_header_by_height(&self, height: u32) -> Result<Option<DiskBlockHeader>, Self::Error>;

fn save_header(&mut self, header: &DiskBlockHeader) -> Result<(), Self::Error>;

fn get_block_hash(&self, height: u32) -> Result<Option<BlockHash>, Self::Error>;

fn flush(&mut self) -> Result<(), Self::Error>;

fn update_block_index(&mut self, height: u32, hash: BlockHash) -> Result<(), Self::Error>;

fn check_integrity(&self) -> Result<(), Self::Error>;

}Hence, implementations of ChainStore are free to use any error type as long as it implements DatabaseError. This is just a marker trait that can be automatically implemented on any T: std::error::Error + std::fmt::Display. This flexibility allows compatibility with different database implementations.

Now, let's do a brief overview of the two provided ChainStore implementations.

FlatChainStore and KvChainStore

Floresta currently offers two ChainStore implementations. The first available implementation, and by far the simplest one, is KvChainStore, which wraps the kv crate (itself a thin layer over sled) to provide a key-value embedded database store.

The second one is FlatChainStore, which replaced KvChainStore as the default store. Nowadays, florestad will compile by default with this store, but you can still use the old KvChainStore if you compile it with --no-default-features --features kv-chainstore. However, FlatChainStore was designed to deliver optimal performance, especially on low-resource devices like smartphones.

Instead of using key-value buckets, FlatChainStore keeps all the data in raw .bin files. Then, we create a memory-map that allows us to read and write to these files as if they were in memory. Once initialized, it has the following directory structure:

chaindata/

├─ headers.bin # mmap‑ed header vector

├─ fork_headers.bin # mmap‑ed header vector for fork chains

├─ blocks_index.bin # mmap‑ed vector<u32>, accessed via a hash‑map linking block hashes to heights

│

├─ accumulators.bin # plain file (roots blob, var‑len records)

└─ metadata.bin # mmap‑ed Metadata struct (version, checksums, file lengths...)For more detailed information about FlatChainStore, see Apendix A.

And that's all for this section! Next we will see two important types used to store and retrieve data via the ChainStore methods: BestChain and DiskBlockHeader.

BestChain and DiskBlockHeader

As previously mentioned, BestChain and DiskBlockHeader are Floresta types used for storing and retrieving data in the ChainStore database.

DiskBlockHeader

We use a custom DiskBlockHeader instead of the direct bitcoin::block::Header to add some metadata:

Filename: pruned_utreexo/chainstore.rs

// Path: floresta-chain/src/pruned_utreexo/chainstore.rs

// BlockHeader is an alias for bitcoin::block::Header

pub enum DiskBlockHeader {

FullyValid(BlockHeader, u32),

AssumedValid(BlockHeader, u32),

Orphan(BlockHeader),

HeadersOnly(BlockHeader, u32),

InFork(BlockHeader, u32),

InvalidChain(BlockHeader),

}DiskBlockHeader not only holds a header but also encodes possible block states, as well as the height when it makes sense.

When we start downloading headers in IBD we save them as HeadersOnly. If a header doesn't have a parent, it's saved as Orphan. If it's not in the best chain, InFork. And when we validate the actual blocks we should be able to mark the headers as FullyValid.

Also, we have AssumeValid for a configuration that allows the node to skip script validation, and InvalidChain for cases when UpdatableChainstate::invalidate_block is called.

BestChain

The BestChain struct is an internal representation of the chain we are in and has the following fields:

best_block: The current best chain's lastBlockHash(the actual block may or may not have been validated yet).depth: The number of blocks pilled after the genesis block (i.e., the height of the tip).validation_index: TheBlockHashup to which we have validated the chain.alternative_tips: A vector of fork tipBlockHashes with a chance of becoming the best chain.

Filename: pruned_utreexo/chainstore.rs

// Path: floresta-chain/src/pruned_utreexo/chainstore.rs

pub struct BestChain {

pub best_block: BlockHash,

pub depth: u32,

pub validation_index: BlockHash,

pub alternative_tips: Vec<BlockHash>,

}Building the ChainState

The next step is building the ChainState struct, which validates blocks and updates the ChainStore.

Filename: pruned_utreexo/chain_state.rs

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

pub struct ChainState<PersistedState: ChainStore> {

inner: RwLock<ChainStateInner<PersistedState>>,

}Note that the RwLock that we use to wrap ChainStateInner is not the one from the standard library but from the spin crate, thus allowing no_std.

std::sync::RwLockrelies on the OS to block and wake threads when the lock is available, whilespin::RwLockuses a spinlock which does not require OS support for thread management, as the thread simply keeps running (and checking for lock availability) instead of sleeping.

The builder for ChainState has this signature:

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

pub fn new(

mut chainstore: PersistedState,

network: Network,

assume_valid: AssumeValidArg,

) -> ChainState<PersistedState> {The first argument is our ChainStore implementation, the second one is the Network enum from the bitcoin crate, and thirdly the AssumeValidArg enum.

The Network enum acknowledges four kinds of networks: Bitcoin (mainchain), Testnet (version 3), Testnet4, Signet and Regtest.

The Assume-Valid Lore

The assume_valid argument refers to a Bitcoin Core option that allows nodes during IBD to assume the validity of scripts (mainly signatures) up to a certain block.

Nodes with this option enabled will still choose the most PoW chain (the best tip), and will only skip script validation if the Assume-Valid block is in that chain. Otherwise, if the Assume-Valid block is not in the best chain, they will validate everything.

When users use the default

Assume-Validhash, hardcoded in the software, they aren't blindly trusting script validity. These hashes are reviewed through the same open-source process as other security-critical changes in Bitcoin Core, so the trust model is unchanged.

In Bitcoin Core, the hardcoded Assume-Valid block hash is included in src/kernel/chainparams.cpp.

Filename: pruned_utreexo/chain_state.rs

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

pub enum AssumeValidArg {

Disabled,

Hardcoded,

UserInput(BlockHash),

}Disabled means the node verifies all scripts, Hardcoded means the node uses the default block hash that has been hardcoded in the software (and validated by maintainers, developers and reviewers), and UserInput means using a hash that the node runner provides, although the validity of the scripts up to this block should have been externally validated.

Genesis and Assume-Valid Blocks

The first part of the body of ChainState::new (let's omit the impl block from now on):

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

pub fn new(

mut chainstore: PersistedState,

network: Network,

assume_valid: AssumeValidArg,

) -> ChainState<PersistedState> {

let parameters = network.into();

let genesis = genesis_block(¶meters);

chainstore

.save_header(&DiskBlockHeader::FullyValid(genesis.header, 0))

.expect("Error while saving genesis");

chainstore

.update_block_index(0, genesis.block_hash())

.expect("Error updating index");

let assume_valid = ChainParams::get_assume_valid(network, assume_valid);

// ...

ChainState {

inner: RwLock::new(ChainStateInner {

chainstore,

acc: Stump::new(),

best_block: BestChain {

best_block: genesis.block_hash(),

depth: 0,

validation_index: genesis.block_hash(),

alternative_tips: Vec::new(),

},

broadcast_queue: Vec::new(),

subscribers: Vec::new(),

fee_estimation: (1_f64, 1_f64, 1_f64),

ibd: true,

consensus: Consensus { parameters },

assume_valid,

}),

}

}First, we use the genesis_block function from bitcoin to retrieve the genesis block based on the specified parameters, which are determined by the Network kind.

Then we save the genesis header into chainstore, which of course is FullyValid and has height 0. We also link the index 0 with the genesis block hash.

Finally, we get an Option<BlockHash> by calling the ChainParams::get_assume_valid function, which takes a Network and an AssumeValidArg.

Filename: pruned_utreexo/chainparams.rs

// Path: floresta-chain/src/pruned_utreexo/chainparams.rs

// Omitted: impl ChainParams {

pub fn get_assume_valid(network: Network, arg: AssumeValidArg) -> Option<BlockHash> {

match arg {

AssumeValidArg::Disabled => None,

AssumeValidArg::UserInput(hash) => Some(hash),

AssumeValidArg::Hardcoded => match network {

Network::Bitcoin => Some(bhash!(

"00000000000000000001ff36aef3a0454cf48887edefa3aab1f91c6e67fee294"

)),

Network::Testnet => Some(bhash!(

"000000007df22db38949c61ceb3d893b26db65e8341611150e7d0a9cd46be927"

)),

Network::Testnet4 => Some(bhash!(

"0000000000335c2895f02ebc75773d2ca86095325becb51773ce5151e9bcf4e0"

)),

Network::Signet => Some(bhash!(

"000000084ece77f20a0b6a7dda9163f4527fd96d59f7941fb8452b3cec855c2e"

)),

Network::Regtest => Some(bhash!(

"0f9188f13cb7b2c71f2a335e3a4fc328bf5beb436012afca590b1a11466e2206"

)),

},

}

}The final part of ChainState::new just returns the instance of ChainState with the ChainStateInner initialized. We will see this initialization next.

Initializing ChainStateInner

This is the struct that holds the meat of the matter (or should we say the root of the issue). As it's inside the spin::RwLock it can be read and modified in a thread-safe way. Its fields are:

acc: The accumulator, of typeStump(which comes from therustreexocrate).chainstore: Our implementation ofChainStore.best_block: Of typeBestChain.broadcast_queue: Holds a list of transactions to be broadcast, of typeVec<Transaction>.subscribers: A vector of trait objects (different types allowed) that implement theBlockConsumertrait, indicating they want to get notified when a new valid block arrives.fee_estimation: Fee estimation for the next 1, 10 and 20 blocks, as a tuple of three f64.ibd: A boolean indicating if we are in IBD.consensus: Parameters for the chain validation, as aConsensusstruct (a Floresta type that will be explained in detail in Chapter 4).assume_valid: As anOption<BlockHash>.

Note that the accumulator and the best block data are kept in our ChainStore, but we cache them in ChainStateInner for faster access, avoiding potential disk reads and deserializations (e.g., loading them from the meta bucket if we use KvChainStore).

Let's next see how these fields are accessed with an example.

Adding Subscribers to ChainState

As ChainState implements BlockchainInterface, it has a subscribe method to allow other types receive notifications.

The subscribers are stored in the ChainStateInner.subscribers field, but we need to handle the RwLock that wraps ChainStateInner for that.

Filename: pruned_utreexo/chain_state.rs

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

// Omitted: impl<PersistedState: ChainStore> BlockchainInterface for ChainState<PersistedState> {

fn subscribe(&self, tx: Arc<dyn BlockConsumer>) {

let mut inner = self.inner.write();

inner.subscribers.push(tx);

}This is the BlockchainInterface::subscribe implementation. We use the write method on the RwLock which then gives us exclusive access to the ChainStateInner.

When inner is dropped after the push, the lock is released and becomes available for other threads, which may acquire write access (one thread at a time) or read access (multiple threads simultaneously).

Initial ChainStateInner Values

In ChainState::new we initialize ChainStateInner like so:

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

pub fn new(

mut chainstore: PersistedState,

network: Network,

assume_valid: AssumeValidArg,

) -> ChainState<PersistedState> {

let parameters = network.into();

let genesis = genesis_block(¶meters);

chainstore

.save_header(&DiskBlockHeader::FullyValid(genesis.header, 0))

.expect("Error while saving genesis");

chainstore

.update_block_index(0, genesis.block_hash())

.expect("Error updating index");

let assume_valid = ChainParams::get_assume_valid(network, assume_valid);

// ...

ChainState {

inner: RwLock::new(ChainStateInner {

chainstore,

acc: Stump::new(),

best_block: BestChain {

best_block: genesis.block_hash(),

depth: 0,

validation_index: genesis.block_hash(),

alternative_tips: Vec::new(),

},

broadcast_queue: Vec::new(),

subscribers: Vec::new(),

fee_estimation: (1_f64, 1_f64, 1_f64),

ibd: true,

consensus: Consensus { parameters },

assume_valid,

}),

}

}The TLDR is that we move chainstore to the ChainStateInner, initialize the accumulator (Stump::new), initialize BestChain with the genesis block (being the best block and best validated block) and depth 0, initialize broadcast_queue and subscribers as empty vectors, set the minimum fee estimations, set ibd to true, use the Consensus parameters for the current Network and move the assume_valid optional hash in.

Recap

In this chapter we have understood the structure of ChainState, a type implementing UpdatableChainstate + BlockchainInterface; a blockchain backend. This type required a ChainStore implementation, expected to save state data to disk, and we examined the provided KvChainStore.

Finally, we have seen the ChainStateInner struct, which keeps track of the ChainStore and more data.

We can now build a ChainState as simply as:

fn main() {

let chain_store =

KvChainStore::new("./epic_location".to_string())

.expect("failed to open the blockchain database");

let chain = ChainState::new(

chain_store,

Network::Bitcoin,

AssumeValidArg::Disabled,

);

}State Transition and Validation

With the ChainState struct built, the foundation for running a node, we can now dive into the methods that validate and apply state transitions.

ChainState has 4 impl blocks (all located in pruned_utreexo/chain_state.rs):

- The

BlockchainInterfacetrait implementation - The

UpdatableChainstatetrait implementation - The implementation of other methods and associated functions like

ChainState::new - The conversion from

ChainStateBuilder(builder type located in pruned_utreexo/chain_state_builder.rs) toChainState

The entry point to the state transition and validation logic are the accept_header and connect_block methods from UpdatableChainstate. As we have explained previously, the first step in the IBD is accepting headers, so we will start with that.

Accepting Headers

The full accept_header method implementation for ChainState is below. To get read or write access to the ChainStateInner we use two macros, read_lock and write_lock.

In short, the method takes a bitcoin::block::Header (type alias BlockHeader) and accepts it on top of our header chain, or maybe reorgs if it's extending a better chain (i.e., switching to the new better chain). If there's an error it returns BlockchainError, which we mentioned in The UpdatableChainstate Trait subsection from Chapter 1.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

fn accept_header(&self, header: BlockHeader) -> Result<(), BlockchainError> {

let disk_header = self.get_disk_block_header(&header.block_hash());

match disk_header {

Err(e @ BlockchainError::Database(_)) => {

// If there's a database error we don't know if we already

// have the header or not

return Err(e);

}

Ok(found) => {

// Possibly reindex to recompute the best_block field

self.maybe_reindex(&found)?;

// We already have this header

return Ok(());

}

_ => (),

}

// The best block we know of

let best_block = self.get_best_block()?;

// Do validation in this header

let block_hash = self.validate_header(&header)?;

// Update our current tip

if header.prev_blockhash == best_block.1 {

let height = best_block.0 + 1;

debug!("Header builds on top of our best chain");

write_lock!(self).best_block.new_block(block_hash, height);

let disk_header = DiskBlockHeader::HeadersOnly(header, height);

self.update_header_and_index(&disk_header, block_hash, height)?;

} else {

debug!("Header not in the best chain");

self.maybe_reorg(header)?;

}

Ok(())

}First, we check if we already have the header in our database. We query it with the get_disk_block_header method, which just wraps ChainStore::get_header in order to return BlockchainError (instead of T: DatabaseError).

If get_disk_block_header returns Err it may be because the header was not in the database or because there was a DatabaseError. In the latter case, we propagate the error.

We have the header

If we already have the header in our database we may reindex, which means recomputing the BestChain struct, and return Ok early.

Reindexing updates the

best_blockfield if it is not up-to-date with the disk headers (for instance, having headers up to the 105th, butbest_blockonly referencing the 100th). This happens when the node is turned off or crashes before persisting the latestBestChaindata.

We don't have the header

If we don't have the header, then we get the best block hash and height (with BlockchainInterface::get_best_block) and perform a simple validation on the header with validate_header. If validation passes, we potentially update the current tip.

- If the new header extends the previous best block:

- We update the

best_blockfield, adding the new block hash and height. - Then we call

save_headerandupdate_block_indexto update the database, via theupdate_header_and_indexhelper.

- We update the

- If the header doesn't extend the current best chain, we may reorg if it extends a better chain.

Reindexing

During IBD, headers arrive rapidly, making it pointless to write the BestChain data to disk for every new header. Instead, we update the ChainStateInner.best_block field and only persist it occasionally, avoiding redundant writes that would be instantly overridden.

But there is a possibility that the node is shut down or crashes before save_height is called (or before the pending write is completed) and after the headers have been written to disk. In this case we can recompute the last BestChain data by going through the headers on disk. This recovery process is handled by the reindex_chain method within maybe_reindex.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

fn maybe_reindex(&self, potential_tip: &DiskBlockHeader) -> Result<(), BlockchainError> {

// Check if the disk header is an unvalidated block in the best chain

if let DiskBlockHeader::HeadersOnly(_, height) = potential_tip {

let best_height = self.get_best_block()?.0;

// If the best chain height is lower, it needs to be updated

if *height > best_height {

warn!("Found block with height {height}, while the current best chain is at {best_height}. Reindexing.");

self.reindex_chain()?;

}

}

Ok(())

}We call reindex_chain if disk header's height > best_block's height, as it means that best_block is not up to date with the headers on disk.

Validate Header

The validate_header method takes a BlockHeader and performs the following checks:

Check the header chain

- Retrieve the previous

DiskBlockHeader. If not found, returnBlockchainError::BlockNotPresentorBlockchainError::Database. - If the previous

DiskBlockHeaderis marked asOrphanorInvalidChain, returnBlockchainError::BlockValidation.

Check the PoW

- Use the

get_next_required_workmethod to compute the expected PoW target and compare it with the header's actual target. If the actual target is easier, returnBlockchainError::BlockValidation. - Check the special BIP 94 rules for the Testnet 4 network.

- Verify the PoW against the target using a

bitcoinmethod. If verification fails, returnBlockchainError::BlockValidation.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

fn validate_header(&self, block_header: &BlockHeader) -> Result<BlockHash, BlockchainError> {

let prev_block = self.get_disk_block_header(&block_header.prev_blockhash)?;

let height = prev_block

.height()

.ok_or(BlockValidationErrors::BlockExtendsAnOrphanChain)?

+ 1;

// ...

// Check pow

let expected_target = self.get_next_required_work(&prev_block, height, block_header)?;

let actual_target = block_header.target();

if actual_target > expected_target {

return Err(BlockValidationErrors::NotEnoughPow)?;

}

self.check_bip94_block(block_header, height)?;

let block_hash = block_header

.validate_pow(actual_target)

.map_err(|_| BlockValidationErrors::NotEnoughPow)?;

Ok(block_hash)

}A block header passing this validation will not make the block itself valid, but we can use this to build the chain of headers with verified PoW.

Reorging the Chain

In the accept_header method we have seen that, when receiving a header that doesn't extend the best chain, we may reorg. This is done with the maybe_reorg method.

We have to choose between the two branches, represented by:

branch_tip: The last header from the alternative chain.current_tip: The last header from the current best chain.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

fn maybe_reorg(&self, branch_tip: BlockHeader) -> Result<(), BlockchainError> {

let current_tip = self.get_block_header(&self.get_best_block()?.1)?;

self.check_branch(&branch_tip)?;

let current_work = self.get_branch_work(¤t_tip)?;

let new_work = self.get_branch_work(&branch_tip)?;

// If the new branch has more work, it becomes the new best chain

if new_work > current_work {

self.reorg(branch_tip)?;

return Ok(());

}

// If the new branch has less work, we just store it as an alternative branch

// that might become the best chain in the future.

self.push_alt_tip(&branch_tip)?;

let parent_height = self.get_ancestor(&branch_tip)?.try_height()?;

self.update_header(&DiskBlockHeader::InFork(branch_tip, parent_height + 1))?;

Ok(())

}We first call the check_branch method to check if we know all the branch_tip ancestors. In other words, we check if branch_tip is indeed part of a branch, which requires that no ancestor is Orphan.

Then we get the work in each chain tip with get_branch_work and do the following:

- We reorg to the

branch_tipif it has more work, and returnOkearly. - Else if

branch_tipdoesn't have more work we push its hash tobest_block.alternative_tipsvia thepush_alt_tipmethod and save the header asInFork.

The push_alt_tip method just checks if the branch_tip parent hash is in alternative_tips to remove it, as it's no longer the tip of the branch. Then we simply push the branch_tip hash.

Reorg

Let's now dig into reorg logic, with reorg. We start by querying the best block hash and use it to query its header. Then we get the header where the branch forks out with find_fork_point.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

fn reorg(&self, new_tip: BlockHeader) -> Result<(), BlockchainError> {

let current_best_block = self.get_block_header(&self.get_best_block()?.1)?;

let fork_point = self.find_fork_point(&new_tip)?;

self.mark_chain_as_inactive(¤t_best_block, fork_point.block_hash())?;

self.mark_chain_as_active(&new_tip, fork_point.block_hash())?;

let validation_index = self.get_last_valid_block(&new_tip)?;

let depth = self.get_chain_depth(&new_tip)?;

self.change_active_chain(&new_tip, validation_index, depth);

self.reorg_acc(&fork_point)?;

Ok(())

}We use mark_chain_as_inactive and mark_chain_as_active to update the disk data (i.e., marking the previous InFork headers as HeadersOnly and vice versa, and linking the height indexes to the new branch block hashes).

Then we invoke get_last_valid_block and get_chain_depth to obtain said data from a branch, provided the branch header tip.

Note that we don't validate forks unless they become the best chain, so in this case the last validated block is the last common block between the two branches.

With this data we call change_active_chain to update the best_block field. We also call reorg_acc to roll back to the saved accumulator for the new last validated block, which is needed to proceed with the new branch validation.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

fn change_active_chain(&self, new_tip: &BlockHeader, last_valid: BlockHash, depth: u32) {

let mut inner = self.inner.write();

inner.best_block.best_block = new_tip.block_hash();

inner.best_block.validation_index = last_valid;

inner.best_block.depth = depth;

}Connecting Blocks

Great! At this point we should have a sense of the inner workings of accept_headers. Let's now understand the connect_block method, which performs the actual block validation and updates the ChainStateInner fields and database. This function is meant to increase our chain validation index, and so it requires to be called on the right block (i.e., the next one to validate).

connect_block takes a Block, an UTXO set inclusion Proof from rustreexo, the UTXOs to spend (stored with metadata in a custom floresta type called UtxoData) and the hashes from said outputs. If result is Ok the function returns the height.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

fn connect_block(

&self,

block: &Block,

proof: Proof,

inputs: HashMap<OutPoint, UtxoData>,

del_hashes: Vec<sha256::Hash>,

) -> Result<u32, BlockchainError> {

let header = self.get_disk_block_header(&block.block_hash())?;

let height = match header {

DiskBlockHeader::FullyValid(_, height) => {

let validation_index = self.get_validation_index()?;

// If this block is not our validation index, but the caller is trying to connect

// it, this is a logical error, and we will have spurious errors, specially with

// invalid proof. They don't mean the block is invalid, just that we are using the

// wrong accumulator, since we are not processing the right block.

if height != validation_index {

return Err(BlockValidationErrors::BlockDoesntExtendTip)?;

}

// If this block is our validation index, but it's fully valid, this clearly means

// there was some corruption of our state. If we don't process this block, we will

// be stuck forever.

//

// Note: You may think "just kick the validation index one block further and we are

// good". But this is not the case, because we still need to update our

// accumulator. Otherwise, the next block will always have an invalid proof

// (because the accumulator is not updated).

height

},

// Our called tried to connect_block on a block that is not the next one in our chain

DiskBlockHeader::Orphan(_)

| DiskBlockHeader::AssumedValid(_, _) // this will be validated by a partial chain

| DiskBlockHeader::InFork(_, _)

| DiskBlockHeader::InvalidChain(_) => return Err(BlockValidationErrors::BlockExtendsAnOrphanChain)?,

DiskBlockHeader::HeadersOnly(_, height) => {

let validation_index = self.get_validation_index()?;

// In case of a `HeadersOnly` block, we need to check if the height is

// the next one after the validation index. If not, we would be trying to

// connect a block where our accumulator isn't the right one. So the proof will

// always be invalid.

if height != validation_index + 1 {

return Err(BlockValidationErrors::BlockDoesntExtendTip)?;

}

height

}

};

// Clone inputs only if a subscriber wants spent utxos

let inputs_for_notifications = self

.inner

.read()

.subscribers

.iter()

.any(|subscriber| subscriber.wants_spent_utxos())

.then(|| inputs.clone());

self.validate_block_no_acc(block, height, inputs)?;

let acc = Consensus::update_acc(&self.acc(), block, height, proof, del_hashes)?;

self.update_view(height, &block.header, acc)?;

info!(

"New tip! hash={} height={height} tx_count={}",

block.block_hash(),

block.txdata.len()

);

#[cfg(feature = "metrics")]

metrics::get_metrics().block_height.set(height.into());

if !self.is_in_ibd() || height % 100_000 == 0 {

self.flush()?;

}

// Notify others we have a new block

self.notify(block, height, inputs_for_notifications.as_ref());

Ok(height)

}When we call connect_block, the header should already be stored on disk, as accept_header is called first. Then we will verify we are calling the function for the right block.

If the header is FullyValid it means we already validated the block, and we only try to re-connect the block if it's the last validated block (i.e., the validation index), which could be needed if some of our data was lost. Else if the header is Orphan, AssumeValid, InFork or InvalidChain we return an error, as this means our block is not mainchain or doesn't require validation.

If header is HeadersOnly, meaning the block is an unvalidated mainchain block, we will check it is the next one to validate. Thus, if we validated up to block h, then we must call connect_block for block h + 1 (this is because we can only use the accumulator at height h to validate the block h + 1).

So, when block is the next block to validate, or it is the validation index, we go on to validate it using validate_block_no_acc, and then the Consensus::update_acc function, which verifies the inclusion proof against the accumulator and returns the updated accumulator.

After this, we have fully validated the block! The next steps in connect_block are updating the state and notifying the block to subscribers.

Post-Validation

After block validation we call update_view to mark the disk header as FullyValid (ChainStore::save_header), update the block hash index (ChainStore::update_block_index) and also update ChainStateInner.acc and the validation index of best_block.

Then, we call UpdatableChainstate::flush every 100,000 blocks during IBD or for each new block once synced. In order, this method invokes:

save_acc, which serializes the accumulator and callsChainStore::save_rootsChainStore::save_heightChainStore::flush, to immediately flush to disk all pending writes

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

fn flush(&self) -> Result<(), BlockchainError> {

let mut inner = write_lock!(self);

let best_block = inner.best_block.clone();

inner.chainstore.save_height(&best_block)?;

inner.chainstore.flush()?;

Ok(())

}Last of all, we notify the new validated block to subscribers.

Block Validation

We have arrived at the final part of this chapter! Here we understand the validate_block_no_acc method that we used in connect_block, and lastly do a recap of everything.

validate_block_no_accis also used inside theBlockchainInterface::validate_blocktrait method implementation, as it encapsulates the non-utreexo validation logic.The difference between

UpdatableChainstate::connect_blockandBlockchainInterface::validate_blockis that the first is used during IBD, while the latter is a tool that allows the node user to validate blocks without affecting the node state.

The method returns BlockchainError::BlockValidation, wrapping many different BlockValidationErrors, which is an enum declared in pruned_utreexo/errors.rs.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

pub fn validate_block_no_acc(

&self,

block: &Block,

height: u32,

inputs: HashMap<OutPoint, UtxoData>,

) -> Result<(), BlockchainError> {

if !block.check_merkle_root() {

return Err(BlockValidationErrors::BadMerkleRoot)?;

}

let bip34_height = self.chain_params().params.bip34_height;

// If bip34 is active, check that the encoded block height is correct

if height >= bip34_height && self.get_bip34_height(block) != Some(height) {

return Err(BlockValidationErrors::BadBip34)?;

}

if !block.check_witness_commitment() {

return Err(BlockValidationErrors::BadWitnessCommitment)?;

}

if block.weight().to_wu() > 4_000_000 {

return Err(BlockValidationErrors::BlockTooBig)?;

}

// Validate block transactions

let subsidy = read_lock!(self).consensus.get_subsidy(height);

let verify_script = self.verify_script(height)?;

#[cfg(feature = "bitcoinconsensus")]

let flags = read_lock!(self)

.consensus

.parameters

.get_validation_flags(height, block.block_hash());

#[cfg(not(feature = "bitcoinconsensus"))]

let flags = 0;

Consensus::verify_block_transactions(

height,

inputs,

&block.txdata,

subsidy,

verify_script,

flags,

)?;

Ok(())

}In order, we do the following things:

- Call

check_merkle_rooton theblock, to check that the merkle root commits to all the transactions. - Check that if the height is greater or equal than that of the activation of BIP 34, the coinbase transaction encodes the height as specified.

- Call

check_witness_commitment, to check that thewtxidmerkle root is included in the coinbase transaction as per BIP 141. - Finally, check that the block weight doesn't exceed the 4,000,000 weight unit limit.

Lastly, we go on to validate the transactions. We retrieve the current subsidy (the newly generated coins) with the get_subsidy method on our Consensus struct. We also call the verify_script method which returns a boolean flag indicating if we are NOT inside the Assume-Valid range.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

fn verify_script(&self, height: u32) -> Result<bool, PersistedState::Error> {

let inner = self.inner.read();

match inner.assume_valid {

Some(hash) => {

match inner.chainstore.get_header(&hash)? {

// If the assume-valid block is in the best chain, only verify scripts if we are higher

Some(DiskBlockHeader::HeadersOnly(_, assume_h))

| Some(DiskBlockHeader::FullyValid(_, assume_h)) => Ok(height > assume_h),

// Assume-valid is not in the best chain, so verify all the scripts

_ => Ok(true),

}

}

None => Ok(true),

}

}We also get the validation flags for the current height with get_validation_flags, only if the bitcoinconsensus feature is active. These flags are used to validate transactions taking into account the different consensus rules that have been added over time.

And lastly we call the Consensus::verify_block_transactions associated function.

Recap

In this chapter we have seen many of the methods for the ChainState type, mainly related to the chain validation and state transition process.

We started with accept_header, that checked if the header was in the database or not. If it was in the database we just called maybe_reindex. If it was not, we validated it and potentially updated the chain tip, either by extending it or by reorging. We also called ChainStore::save_header and ChainStore::update_block_index.

Then we saw connect_block, which validated the next block in the chain with validate_block_no_acc. If block was valid, we may invoke UpdatableChainstate::flush to persist all data (including the data saved with ChainStore::save_roots and ChainStore::save_height) and also marked the disk header as FullyValid. Finally, we notified the new block.

Consensus and bitcoinconsensus

In the previous chapter, we saw that the block validation process involves two associated functions from Consensus:

verify_block_transactions: The last check performed insidevalidate_block_no_acc, after having validated the two merkle roots and height commitment.update_acc: Called insideconnect_block, just aftervalidate_block_no_acc, to verify the utreexo proof and get the updated accumulator.

The Consensus struct only holds a parameters field (as we saw when we initialized ChainStateInner) and provides a few core consensus functions. In this chapter we are going to see the two mentioned functions and discuss the details of how we verify scripts.

Filename: pruned_utreexo/consensus.rs

// Path: floresta-chain/src/pruned_utreexo/consensus.rs

pub struct Consensus {

// The chain parameters are in the chainparams.rs file

pub parameters: ChainParams,

}bitcoinconsensus

Consensus::verify_block_transactions is a critical part of Floresta, as it validates all the transactions in a block. One of the hardest parts for validation is checking the script satisfiability, that is, verifying whether the inputs can indeed spend the coins. It's also the most resource-intensive part of block validation, as it requires verifying many digital signatures.

Implementing a Bitcoin script interpreter is challenging, and given the complexity of both C++ and Rust, we cannot be certain that it will always behave in the same way as Bitcoin Core. This is problematic because if our Rust implementation rejects a script that Bitcoin Core accepts, our node will fork from the network. It will treat subsequent blocks as invalid, halting synchronization with the chain and being unable to continue tracking the user balance.

Partly because of this reason, in 2015 the script validation logic of Bitcoin Core was extracted and placed into the libbitcoin-consensus library. This library includes 35 files that are identical to those of Bitcoin Core. Subsequently, the library API was bound to Rust in rust-bitcoinconsensus, which serves as the bitcoinconsensus feature-dependency in the bitcoin crate.

If this feature is set, bitcoin provides a verify_with_flags method on Transaction, which performs the script validation by calling C++ code extracted from Bitcoin Core. Floresta uses this method to verify scripts.

libbitcoinkernel

bitcoinconsensushandles only script validation and is maintained as a separate project fromBitcoin Core, with limited upkeep.To address these shortcomings there's an ongoing effort within the

Bitcoin Corecommunity to extract the whole consensus engine into a library. This is known as the libbitcoinkernel project.Once this is achieved, we should be able to drop all the consensus code in Floresta and replace the

bitcoinconsensusdependency with the Rust bindings for the new library. This would make Floresta safer and more reliable as a full node.

Transaction Validation

Let's now dive into Consensus::verify_block_transactions, to see how we verify the transactions in a block. As we saw in the Block Validation section from last chapter, this function takes the height, the UTXOs to spend, the spending transactions, the current subsidy, the verify_script boolean (which was only true when we are not in the Assume-Valid range) and the validation flags.

// Path: floresta-chain/src/pruned_utreexo/chain_state.rs

pub fn validate_block_no_acc(

&self,

block: &Block,

height: u32,

inputs: HashMap<OutPoint, UtxoData>,

) -> Result<(), BlockchainError> {

if !block.check_merkle_root() {

return Err(BlockValidationErrors::BadMerkleRoot)?;

}

let bip34_height = self.chain_params().params.bip34_height;

// If bip34 is active, check that the encoded block height is correct

if height >= bip34_height && self.get_bip34_height(block) != Some(height) {

return Err(BlockValidationErrors::BadBip34)?;

}

if !block.check_witness_commitment() {

return Err(BlockValidationErrors::BadWitnessCommitment)?;

}

if block.weight().to_wu() > 4_000_000 {

return Err(BlockValidationErrors::BlockTooBig)?;

}

// Validate block transactions

let subsidy = read_lock!(self).consensus.get_subsidy(height);

let verify_script = self.verify_script(height)?;

// ...

#[cfg(feature = "bitcoinconsensus")]

let flags = read_lock!(self)

.consensus

.parameters

.get_validation_flags(height, block.block_hash());

#[cfg(not(feature = "bitcoinconsensus"))]

let flags = 0;

Consensus::verify_block_transactions(

height,

inputs,

&block.txdata,

subsidy,

verify_script,

flags,

)?;

Ok(())

}Validation Flags

The validation flags were returned by get_validation_flags based on the current height and block hash, and they are of type core::ffi::c_uint: a foreign function interface type used for the C++ bindings.

// Path: floresta-chain/src/pruned_utreexo/chainparams.rs

// Omitted: impl ChainParams {

#[cfg(feature = "bitcoinconsensus")]

/// Returns the validation flags for a given block hash and height

pub fn get_validation_flags(&self, height: u32, hash: BlockHash) -> c_uint {

if let Some(flag) = self.exceptions.get(&hash) {

return *flag;

}

// From Bitcoin Core:

// BIP16 didn't become active until Apr 1 2012 (on mainnet, and

// retroactively applied to testnet)

// However, only one historical block violated the P2SH rules (on both

// mainnet and testnet).

// Similarly, only one historical block violated the TAPROOT rules on

// mainnet.

// For simplicity, always leave P2SH+WITNESS+TAPROOT on except for the two

// violating blocks.

let mut flags = bitcoinconsensus::VERIFY_P2SH | bitcoinconsensus::VERIFY_WITNESS;

if height >= self.params.bip65_height {

flags |= bitcoinconsensus::VERIFY_CHECKLOCKTIMEVERIFY;

}

if height >= self.params.bip66_height {

flags |= bitcoinconsensus::VERIFY_DERSIG;

}

if height >= self.csv_activation_height {

flags |= bitcoinconsensus::VERIFY_CHECKSEQUENCEVERIFY;

}

if height >= self.segwit_activation_height {

flags |= bitcoinconsensus::VERIFY_NULLDUMMY;

}

flags

}The flags cover the following consensus rules added to Bitcoin over time:

- P2SH (BIP 16): Activated at height 173,805

- Enforce strict DER signatures (BIP 66): Activated at height 363,725

- CHECKLOCKTIMEVERIFY (BIP 65): Activated at height 388,381

- CHECKSEQUENCEVERIFY (BIP 112): Activated at height 419,328

- Segregated Witness (BIP 141) and Null Dummy (BIP 147): Activated at height 481,824

Verify Block Transactions

Now, the Consensus::verify_block_transactions function has this body, which in turn calls Consensus::verify_transaction:

Filename: pruned_utreexo/consensus.rs

// Path: floresta-chain/src/pruned_utreexo/consensus.rs

// Omitted: impl Consensus {

/// Verify if all transactions in a block are valid. Here we check the following:

/// - The block must contain at least one transaction, and this transaction must be coinbase

/// - The first transaction in the block must be coinbase

/// - The coinbase transaction must have the correct value (subsidy + fees)

/// - The block must not create more coins than allowed

/// - All transactions must be valid, as verified by [`Consensus::verify_transaction`]

#[allow(unused)]

pub fn verify_block_transactions(

height: u32,

mut utxos: HashMap<OutPoint, UtxoData>,

transactions: &[Transaction],

subsidy: u64,

verify_script: bool,

flags: c_uint,

) -> Result<(), BlockchainError> {

// Blocks must contain at least one transaction (i.e., the coinbase)

if transactions.is_empty() {

return Err(BlockValidationErrors::EmptyBlock)?;

}

// Total block fees that the miner can claim in the coinbase

let mut fee = 0;

for (n, transaction) in transactions.iter().enumerate() {

if n == 0 {

if !transaction.is_coinbase() {

return Err(BlockValidationErrors::FirstTxIsNotCoinbase)?;

}

Self::verify_coinbase(transaction)?;

// Skip next checks: coinbase input is exempt, coinbase reward checked later

continue;

}

// Actually verify the transaction

let (in_value, out_value) =

Self::verify_transaction(transaction, &mut utxos, height, verify_script, flags)?;

// Fee is the difference between inputs and outputs

fee += in_value - out_value;

}

// Check coinbase output values to ensure the miner isn't producing excess coins

let allowed_reward = fee + subsidy;

let coinbase_total: u64 = transactions[0]

.output

.iter()

.map(|out| out.value.to_sat())

.sum();

if coinbase_total > allowed_reward {

return Err(BlockValidationErrors::BadCoinbaseOutValue)?;

}

Ok(())

}

/// Verifies a single, non-coinbase transaction. To verify (the structure of) a coinbase

/// transaction, use [`Consensus::verify_coinbase`].

///

/// This function checks that the transaction:

/// - Has at least one input and one output

/// - Doesn't have null PrevOuts (reserved only for coinbase transactions)

/// - Doesn't spend more coins than it claims in the inputs

/// - Doesn't "move" more coins than allowed (at most 21 million)

/// - Spends mature coins, in case any input refers to a coinbase transaction

/// - Has valid scripts (if we don't assume them), and within the allowed size

pub fn verify_transaction(

transaction: &Transaction,

utxos: &mut HashMap<OutPoint, UtxoData>,

height: u32,

_verify_script: bool,

_flags: c_uint,

) -> Result<(u64, u64), BlockchainError> {

let txid = || transaction.compute_txid();

if transaction.input.is_empty() {

return Err(tx_err!(txid, EmptyInputs))?;

}

if transaction.output.is_empty() {

return Err(tx_err!(txid, EmptyOutputs))?;

}

let out_value: u64 = transaction

.output

.iter()

.map(|out| out.value.to_sat())

.sum();

let mut in_value = 0;

for input in transaction.input.iter() {

// Null PrevOuts are only allowed in coinbase inputs

if input.previous_output.is_null() {

return Err(tx_err!(txid, NullPrevOut))?;

}

let utxo = Self::get_utxo(input, utxos, txid)?;

let txout = &utxo.txout;

// A coinbase output created at height n can only be spent at height >= n + 100

if utxo.is_coinbase && (height < utxo.creation_height + 100) {

return Err(tx_err!(txid, CoinbaseNotMatured))?;

}

// Check script sizes (spent txo pubkey, and current tx scriptsig and TODO witness)

Self::validate_script_size(&txout.script_pubkey, txid)?;

Self::validate_script_size(&input.script_sig, txid)?;

// TODO check also witness script size

in_value += txout.value.to_sat();

}

// Value in should be greater or equal to value out. Otherwise, inflation.

if out_value > in_value {

return Err(tx_err!(txid, NotEnoughMoney))?;

}

// Sanity check

if out_value > 21_000_000 * COIN_VALUE {

return Err(BlockValidationErrors::TooManyCoins)?;

}

// Verify the tx script

#[cfg(feature = "bitcoinconsensus")]

if _verify_script {

transaction

.verify_with_flags(

|outpoint| utxos.remove(outpoint).map(|utxo| utxo.txout),

_flags,

)

.map_err(|e| tx_err!(txid, ScriptValidationError, format!("{e:?}")))?;

};

Ok((in_value, out_value))

}In general, the function behavior is well explained in the comments. Something to note is that we need the bitcoinconsensus feature set in order to compile verify_with_flags and verify the transaction scripts. Because this method is just a C++ call behind the scenes, we make it optional for some architectures to compile the Floresta crates without C++ support.

We also don't validate if verify_script is false, but this is because the Assume-Valid process has already assessed the scripts as valid.

Note that these consensus checks are far from complete. More checks will be added in the short term, but once libbitcoinkernel bindings are ready this function will instead use them.

Utreexo Validation

In the previous section we have seen the Consensus::verify_block_transactions function. It was taking a utxos argument, used to verify that each transaction input satisfies the script of the UTXO it spends, and that transactions spend no more than the sum of input amounts.

However, we have yet to verify that these utxos actually exist in the UTXO set, i.e., check that nobody is spending coins out of thin air. That's what we are going to do inside Consensus::update_acc, and get the updated UTXO set accumulator, with spent UTXOs removed and new ones added.

Recall that

Stumpis the type of our accumulator, coming from therustreexocrate.Stumprepresents the merkle roots of a forest where leaves are UTXO hashes.

Figure 4: A visual depiction of the utreexo forest. To prove that UTXO 4 is part of the set we provide the hash of UTXO 3 and h1. With this data we can re-compute the h5 root, which must be identical. Credit: original utreexo post.

This function first verifies we are not spending any of the two historical unspendable UTXOs according to BIP 30. In the early days of Bitcoin there were two occurrences of duplicate transaction IDs, which override the previous UTXO with the same transaction ID. Because utreexo leaf hashes commit to more data, we don't see the previous UTXO as overridden, so we need to enforce it is unspendable manually.

// Path: floresta-chain/src/pruned_utreexo/consensus.rs

// Omitted: impl Consensus {

pub fn update_acc(

acc: &Stump,

block: &Block,

height: u32,

proof: Proof,

del_hashes: Vec<sha256::Hash>,

) -> Result<Stump, BlockchainError> {

let block_hash = block.block_hash();

// Check if there is a spend of an unspendable UTXO (BIP30)

if Self::contains_unspendable_utxo(&del_hashes) {

return Err(BlockValidationErrors::UnspendableUTXO)?;

}

// Convert to BitcoinNodeHash, from rustreexo

let del_hashes: Vec<_> = del_hashes.into_iter().map(Into::into).collect();

let adds = udata::proof_util::get_block_adds(block, height, block_hash);

// Update the accumulator

let acc = acc.modify(&adds, &del_hashes, &proof)?.0;

Ok(acc)

}Then we get the new leaf hashes (the hashes of newly created UTXOs in the block) by calling udata::proof_util::get_block_adds. This function returns the new leaves to add to the accumulator, which exclude two cases:

- Created UTXOs that are provably unspendable (i.e., an OP_RETURN output or any output with a script larger than 10,000 bytes).

- Created UTXOs spent within the same block.

Finally, we get the updated Stump using its modify method, provided the leaves to add, the leaves to remove and the proof of inclusion for the latter. This method both verifies the proof and generates the new accumulator.

Advanced Chain Validation Methods

Before moving on from floresta-chain to floresta-wire, it's time to understand some advanced validation methods and another Chain backend provided by Floresta: the PartialChainState.

Although similar to ChainState, PartialChainState focuses on validating a limited range of blocks rather than the entire chain. This design is closely linked to concepts like UTXO snapshots (precomputed UTXO states at specific blocks) and, in particular, out-of-order validation, which we’ll explore below.

Out-of-Order Validation

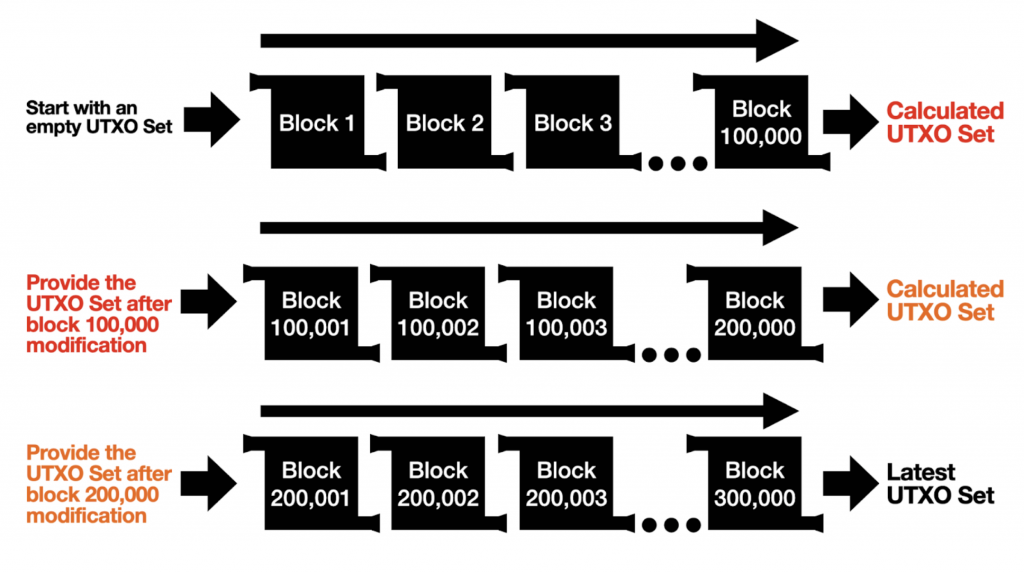

One of the most powerful features enabled by utreexo is out-of-order validation, which allows block intervals to be validated independently if we know the utreexo roots at the start of each interval.

In traditional IBDs, block validation is inherently sequential: a block at height h depends on the UTXO set resulting from block h - 1, which in turn depends on h - 2, and so forth. However, with UTXO set snapshots for specific blocks, validation can become non-linear.

Figure 5: Visual explanation of three block intervals, starting at block 1, 100,001, and 200,001, that can be validated in parallel if we have the UTXO sets for those blocks. Credit: original post from Calvin Kim.

This process remains fully trustless because, at the end, we verify that the resulting UTXO set from one interval matches the UTXO set snapshot used to start the next. For example, in the image, the UTXO set after block 100,000 must match the set used for the interval beginning at block 100,001, and so on.

Ultimately, the sequential nature of block validation is preserved. The worst outcome is wasting resources if the UTXO snapshots are incorrect, so it's still important to obtain these snapshots from a reliable source, such as hardcoded values within the software or reputable peers.

Out-of-Order Validation Without Utreexo

Out-of-order validation is technically possible without utreexo, but it would require entire UTXO sets for each interval, which would take many gigabytes.

Utreexo makes this feasible with compact accumulators, avoiding the need for full UTXO set storage and frequent disk reads. Instead, spent UTXOs are fetched on demand from the network, along with their inclusion proofs.

Essentially, we are trading disk operations for hash computations (by verifying merkle proofs and updating roots), along with a slightly higher network data demand. In other words, utreexo enables parallel validation while avoiding the bottleneck of slow disk access.

Trusted UTXO Set Snapshots

A related but slightly different concept is the Assume-Utxo feature in Bitcoin Core, which hardcodes a trusted, recent UTXO set hash. When a new node starts syncing, it downloads the corresponding UTXO set from the network, verifies its hash against the hardcoded value, and temporarily assumes it to be valid. Starting from this snapshot, the node can quickly sync to the chain tip (e.g., if the snapshot is from block 850,000 and the tip is at height 870,000, only 20,000 blocks need to be validated to get a synced node).

This approach bypasses most of the IBD time, enabling rapid node synchronization while still silently completing IBD in the background to fully validate the UTXO set snapshot. It builds on the Assume-Valid concept, relying on the open-source process to ensure the correctness of hardcoded UTXO set hashes.

This idea, adapted to Floresta, is what we call Assume-Utreexo, a hardcoded UTXO snapshot in the form of utreexo roots. These hardcoded values are located in pruned_utreexo/chainparams.rs, alongside the Assume-Valid hashes.

// Path: floresta-chain/src/pruned_utreexo/chainparams.rs

impl ChainParams {

pub fn get_assume_utreexo(network: Network) -> AssumeUtreexoValue {

let genesis = genesis_block(Params::new(network));

match network {

Network::Bitcoin => AssumeUtreexoValue {

block_hash: bhash!(

"0000000000000000000239f2b7f982df299193bdd693f499e6b893d8276ab7ce"

),

height: 902967,

roots: acchashes![

"bd53eef66849c9d3ca13b62ce694030ac4d4b484c6f490f473b9868a7c5df2e8",

"993ffb1782db628c18c75a5edb58d4d506167d85ca52273e108f35b73bb5b640",

"36d8c4ba5176c816bdae7c4119d9f2ea26a1f743f5e6e626102f66a835eaac6d",

"4c93092c1ecd843d2b439365609e7f616fe681de921a46642951cb90873ba6ce",

"9b4435987e18e1fe4efcb6874bba5cdc66c3e3c68229f54624cb6343787488a4",

"ab1e87c4066bf195fa7b8357874b82de4fa09ddba921499d6fc73aa133200505",

"8f8215e284dbce604988755ba3c764dbfa024ae0d9659cd67b24742f46360849",

"09b5057a8d6e1f61e93baf474220f581bd1a38d8a378dacb5f7fdec532f21e00",

"a331072d7015c8d33a5c17391264a72a7ca1c07e1f5510797064fced7fbe591d",

"c1c647289156980996d9ea46377e8c1b7e5c05940730ef8c25c0d081341221b5",

"330115a495ed14140cd785d44418d84b872480d293972abd66e3325fdc78ac93",

"b1d7a488e1197908efb2091a3b750508cb2fc495d2011bf2c34c5ae2d40bd2a5",

"3b3b2e51ad96e1ae8ce468c7947b8aa2b41ecb400a32edec3dbcfe5ddb9aca50",

"9d852775775f4c1e4a150404776a6b22569a0fe31f2e669fd3b31a0f70072800",

"8e5f6a92169ad67b3f2682f230e2a62fc849b0a47bc36af8ce6cae24a5343126",

"6dbd2925f8aa0745ac34fc9240ce2a7ef86953fc305c6570ef580a0763072bbe",

"8121c38dcb37684c6d50175f5fd2695af3b12ce0263d20eb7cc503b96f7dba0d",

"f5d8b30dd2038e1b3a5ced7a30c961e230270020c336fb649d0a9e169f11b876",

"0466bd4eb9e7be5b8870e97d2a66377525391c16f15dbcc3833853c8d3bae51e",

"976184c55f74cbb780938a20e2a5df2791cf51e712f68a400a6b024c77ad78e4",

]

.to_vec(),

leaves: 2860457445,

},

Network::Testnet => AssumeUtreexoValue {

// ...

block_hash: genesis.block_hash(),

height: 0,

leaves: 0,

roots: Vec::new(),

},

Network::Testnet4 => AssumeUtreexoValue {

// ...

block_hash: genesis.block_hash(),

height: 0,

leaves: 0,

roots: Vec::new(),

},

Network::Signet => AssumeUtreexoValue {

// ...

block_hash: genesis.block_hash(),

height: 0,

leaves: 0,

roots: Vec::new(),

},

Network::Regtest => AssumeUtreexoValue {

// ...

block_hash: genesis.block_hash(),

height: 0,

leaves: 0,

roots: Vec::new(),

},

}

}

// ...

pub fn get_assume_valid(network: Network, arg: AssumeValidArg) -> Option<BlockHash> {

match arg {

AssumeValidArg::Disabled => None,

AssumeValidArg::UserInput(hash) => Some(hash),

AssumeValidArg::Hardcoded => match network {

Network::Bitcoin => Some(bhash!(

"00000000000000000001ff36aef3a0454cf48887edefa3aab1f91c6e67fee294"

)),

Network::Testnet => Some(bhash!(

"000000007df22db38949c61ceb3d893b26db65e8341611150e7d0a9cd46be927"

)),

Network::Testnet4 => Some(bhash!(

"0000000000335c2895f02ebc75773d2ca86095325becb51773ce5151e9bcf4e0"

)),

Network::Signet => Some(bhash!(

"000000084ece77f20a0b6a7dda9163f4527fd96d59f7941fb8452b3cec855c2e"

)),

Network::Regtest => Some(bhash!(